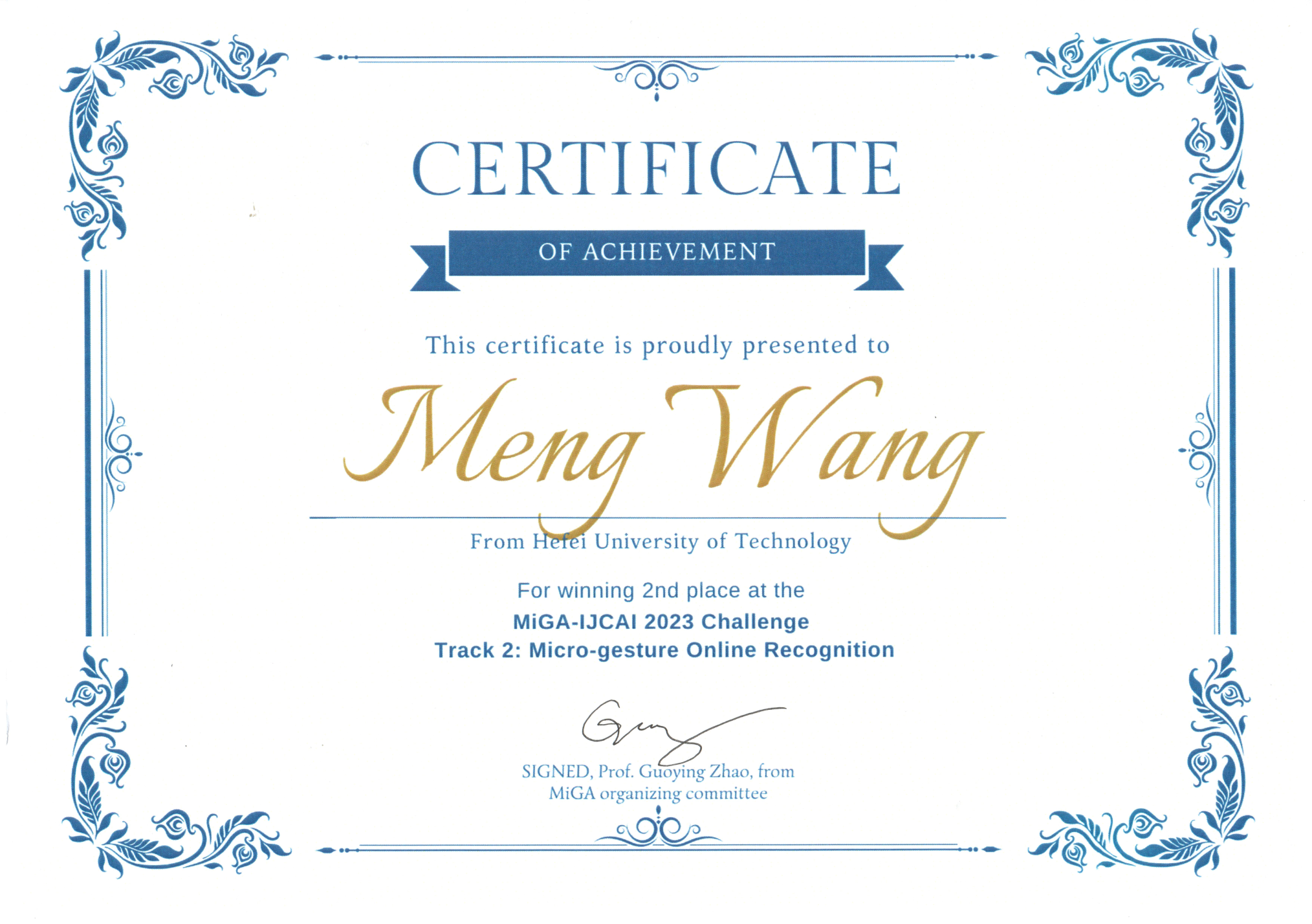

The 2023 International Joint Conference on Artificial Intelligence (IJCAI 2023) was held in Macau, China, beginning on August 19, 2023. At the conference, the results of the 2023 Micro-gesture Analysis and Emotion Understanding Challenge (the 1st Workshop & Challenge on Micro-gesture Analysis for Hidden Emotion Understanding) were announced. A team of faculty and students from the Multimedia Computing Laboratory of Hefei University of Technology took part in the event for the first time. They won first place in the micro-gesture recognition track and second place in the online micro-gesture detection track.

The International Joint Conference on Artificial Intelligence is a top-tier (Class A) international conference recommended by the China Computer Federation (CCF). First held in 1969, it is one of the most influential conferences in artificial intelligence worldwide. The International Micro-gesture Analysis and Emotion Understanding Challenge was held at IJCAI for the first time this year. It was jointly initiated by universities and research institutes including the University of Oulu in Finland, the University of Augsburg in Germany, Stanford University in the United States, and the Institute of Psychology of the Chinese Academy of Sciences. This year's MiGA (Micro-gesture Analysis) challenge had two tracks and drew registrations from nearly a hundred teams at universities and research institutions around the world. These included strong teams from Stanford University, the University of Science and Technology of China, and Northwestern Polytechnical University. Contestants had to classify 32 types of micro-gestures from the provided skeleton keypoint data. The task is very difficult because the movements are subtle and the classes are highly similar to one another.

The Multimedia Computing Laboratory team was led by Professor Wang Meng and advised by Professor Guo Dan. Its members included graduate students Li Kun, Chen Guoliang, and Peng Xinge. Micro-gestures differ from one another only by small movements. To address this, the team proposed a new algorithm that combines the semantics of the class labels with the semantics of the visual features, which helps separate the features of different micro-gesture categories. Their final results were nearly 40% better than the official baseline model, giving accurate recognition and detection of highly similar micro-gestures. The team also published a paper at the conference and presented the work in person.
